In the wide world of artificial intelligence (AI), the term "black boxes" has resonated strongly. Often, when talking about machine learning models, we come across this expression. But what does it really mean? A black box is a system whose inner workings are opaque to the observer; we know what goes in and what comes out, but the "how" remains hidden. This analogy has been applied to AI models to express how, at times, their internal decision-making processes are incomprehensible to humans.

Why has this term become especially popular in the data science community? The answer lies in an interesting crossover between technology and human perception. Many of the early popularizers of the term were people with little or no programming experience. This is no coincidence: when people encountered systems that produced impressive results but whose internal logic was inaccessible to them, a narrative of awe, and sometimes suspicion, towards these "mysterious machines" was born.

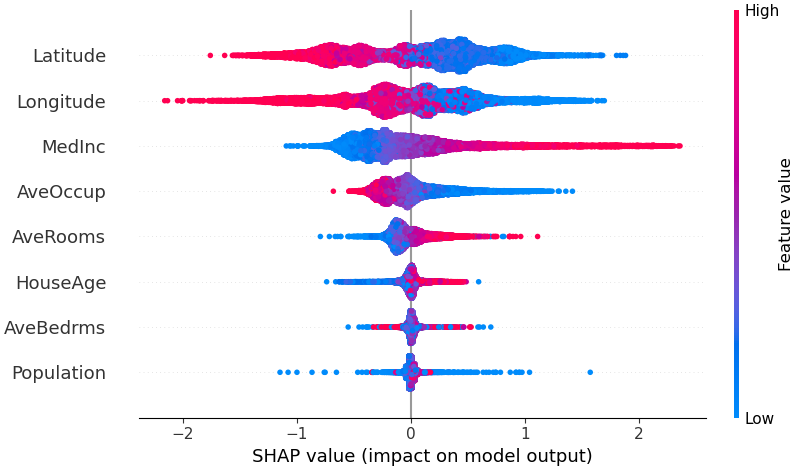

But is it possible to understand what these models learned? Yes: there are methods and tools specifically designed to unravel the mysteries of these models. A prime example is SHAP values, which let us interpret how much each variable contributes to the prediction of a machine learning model.

In this summary plot, the higher a variable appears, the more important it is for the prediction. The color shows the value of the variable (red for high values, blue for low ones), and the horizontal position shows how that value impacts the result. For Latitude, for example, the red points sit on the left, so the smaller the latitude, the greater the predicted value. AveRooms is the opposite case: the red points sit on the right, so the larger AveRooms is, the higher the predicted value.
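To make "the contribution of each variable" concrete, here is a minimal sketch that computes exact Shapley values for a single prediction using only the Python standard library. It is not the `shap` library, which relies on faster approximations. The toy model, the feature values, and the `MedInc` feature are hypothetical and only there for illustration, and the sketch assumes that a "missing" feature is replaced by a fixed baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction.

    Assumption of this sketch: features outside a coalition are
    replaced by their value in `baseline`.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight shared by every coalition of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = [x[j] if j in coalition else baseline[j]
                             for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                # Marginal effect of adding feature i to this coalition
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def toy_model(features):
    """Hypothetical hand-written model, not a trained one."""
    latitude, ave_rooms, med_inc = features
    return 5.0 - 0.1 * latitude + 0.3 * ave_rooms + 0.5 * med_inc * ave_rooms


x = [34.0, 6.0, 4.0]         # instance to explain (made-up values)
baseline = [36.0, 5.0, 3.0]  # reference point (made-up values)

phi = shapley_values(toy_model, x, baseline)
for name, value in zip(["Latitude", "AveRooms", "MedInc"], phi):
    print(f"{name:>9}: {value:+.3f}")
```

The contributions always add up to the difference between the prediction for `x` and the prediction for the baseline, which is the property that makes this kind of plot readable. The loop visits every subset of features, so this exact version is only practical for a handful of variables.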

What we see here is a force plot. Everything in red pushes the predicted value higher, while everything in blue pushes it lower.
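The logic behind a force plot can be sketched in a few lines: start from a base value and add each variable's contribution. All numbers below, and the `HouseAge` feature, are hypothetical and chosen only to illustrate the idea; they do not come from a real model.

```python
# Hypothetical values for illustration only.
base_value = 2.0  # the value the plot starts from
contributions = {"Latitude": 0.5, "AveRooms": 0.25, "HouseAge": -0.25}

# Positive contributions are drawn in red, negative ones in blue.
pushes_up = {k: v for k, v in contributions.items() if v > 0}
pushes_down = {k: v for k, v in contributions.items() if v < 0}

# The prediction is the base value plus all contributions.
prediction = base_value + sum(contributions.values())

for name, value in contributions.items():
    symbol = "+" if value > 0 else "-"
    bar = symbol * int(abs(value) * 20)  # crude text version of the bars
    print(f"{name:>9} {value:+.2f} {bar}")
print(f"base {base_value:.2f} -> prediction {prediction:.2f}")
```

Reading the plot is then just a matter of comparing the total length of the red bars with the total length of the blue ones.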

In the specific field of deep learning, interpretation tools have also advanced significantly. Grad-CAM, or the VIZGRIDCAM library, for example, lets us visualize which parts of an image most influence the decision of a convolutional neural network, providing visual clues about its "thinking". These types of tools open a window into how these networks process data and make decisions based on it.

To summarize: if I have the task of telling a cougar from a cat, my first deduction may be based on size. But if all I have is a photo, with nothing to give me the proportions of the bodies, this is where these tools become especially powerful, because a heat map tells us which area of the body to look at to find the differences.

In the top row we have the raw images, and in the bottom row the same photos after being processed by this library. Pay attention to the heat map: it tells us which area of the image to focus on in order to classify each of the objects to be detected.
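How such a heat map is built can be sketched as follows. This is only the final combination step of Grad-CAM, written in plain Python: it assumes the feature maps of a convolutional layer and the gradients of the class score with respect to them have already been obtained from a network. The tiny 2x2 arrays are hypothetical, and the final scaling to the range 0 to 1 is a common display convenience rather than part of the method itself.

```python
def grad_cam(activations, gradients):
    """Combine feature maps into a Grad-CAM heat map.

    activations[k][i][j]: value of feature map k at position (i, j)
    gradients[k][i][j]:   gradient of the class score w.r.t. that value
    """
    height = len(activations[0])
    width = len(activations[0][0])
    heatmap = [[0.0] * width for _ in range(height)]

    for fmap, grad in zip(activations, gradients):
        # Channel weight: average of the gradients over all positions
        alpha = sum(sum(row) for row in grad) / (height * width)
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += alpha * fmap[i][j]

    # ReLU: keep only the regions that support the class
    heatmap = [[max(0.0, v) for v in row] for row in heatmap]

    # Scale to [0, 1] for display (assumption of this sketch)
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[v / peak for v in row] for row in heatmap]
    return heatmap


# Hypothetical 2-channel, 2x2 example
activations = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[4.0, 0.0], [0.0, 0.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],      # this channel supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # this channel argues against it
]

heatmap = grad_cam(activations, gradients)
for row in heatmap:
    print(row)
```

In practice the resulting map is resized to the size of the input image and overlaid on it, which is what produces pictures like the ones in the bottom row.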

Moreover, for those who prefer a more mathematical approach, research such as that presented in Symbolic Neural Networks offers methods for translating complex neural networks into understandable equations.

What about Large Language Models (LLMs) such as GPT? Here we enter even more complex and fascinating territory. Interpreting and understanding these models is a different challenge, and it will be the focus of our next note.
